EmbeddedSesameEngine

Here, we describe the EmbeddedSesameEngine, a Linked Data Engine that uses the Sesame library to automatically fill an embedded Sesame triple store for storing and querying Linked Data.

Contents

- 1 Query execution

- 2 Crawling Strategy

- 3 Validation of data

- 4 Entity Consolidation

- 4.1 Problem: Differing hierarchies of gesis:geo and estatwrap:geo

- 4.2 Problem: For Convert-Cube, I do not want Entity Consolidation since this would make the evaluation more complex. For Drill-Across, I want Entity Consolidation. How to distinguish?

- 4.3 Problem: What about diced dimensions that are not contained in one cube?

- 4.4 New Experiment as in ISEM paper

- 5 New experiments: DrillAcross GdpEmployment

- 6 LogicalToPhysical Query Plan Strategy

- 7 Default Measure Strategies

Query execution

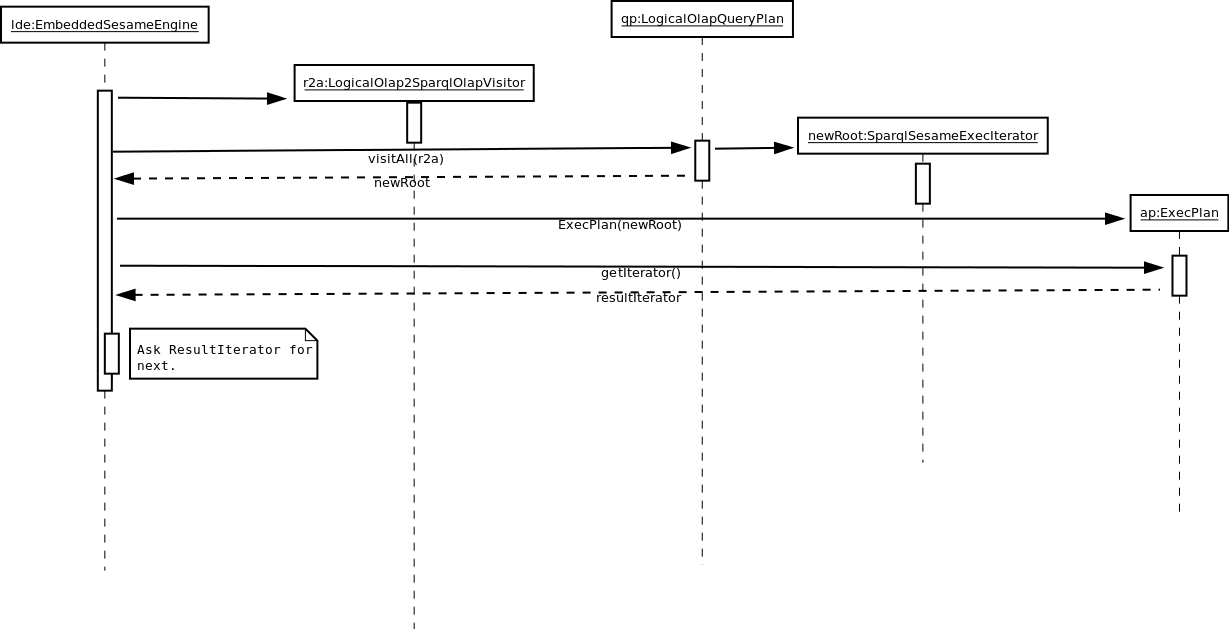

EmbeddedSesameEngine evaluates metadata queries using SPARQL templates filled with Node parameters. For each multidimensional element, there are several SPARQL templates for different ways of modelling, e.g., measures can define their own aggregation functions or AVG and COUNT are used by default. To evaluate analytical queries, the logical query plan is translated to a physical query plan; for each separate drill-across sub-query plan, we execute our OLAP-to-SPARQL algorithm [KH13] and join the results.

Implementation of Query Strategy

Crawling Strategy

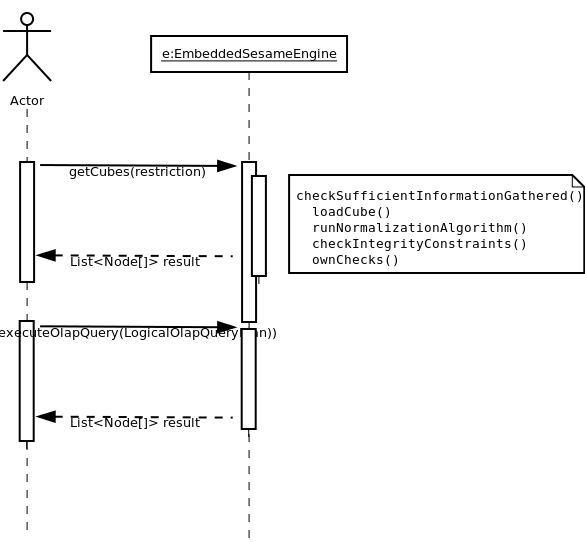

Before executing a metadata or analytical query with SPARQL, the EmbeddedSesameEngine automatically loads necessary data into an embedded Sesame RDF store.

EmbeddedSesameEngine first resolves all queried dataset URIs and loads the RDF into an embedded Sesame RDF store. Then, the engine in turn issues SPARQL queries against its store to find additional URIs to resolve and load; it resolves all instances of concepts defined in the QB specification in the order in which they can be reached from the dataset URI, from qb:DataStructureDefinitions over qb:ComponentProperty to single qb:Concepts. Since there is no standard way to publish QB observations, the engine assumes that the observations are represented as blank nodes and stored at the location of the dataset URI. Such “directed crawling” of the data cube has the advantage that necessary data is found quickly and that not all information has to be given in one location, but can be distributed and reused, e.g., the range for the ical:dtstart dimension is provided by its URI.
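The crawl order described above can be sketched as a breadth-first traversal that starts at the dataset URI. This is only an illustration: the class `DirectedCrawlSketch` and the `links` map are hypothetical, and the map stands in for "resolve the URI, load its RDF into the store, and ask the store with SPARQL which further QB resources it references".

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch of directed crawling; not the olap4ld implementation. */
final class DirectedCrawlSketch {

    /**
     * Returns the URIs in the order they would be resolved, starting at the
     * dataset URI. The links map is an assumption that replaces HTTP lookups
     * and SPARQL queries against the embedded store.
     */
    static List<String> crawl(String datasetUri, Map<String, List<String>> links) {
        Set<String> loaded = new LinkedHashSet<>();   // remembers load order
        Deque<String> frontier = new ArrayDeque<>();
        frontier.add(datasetUri);
        while (!frontier.isEmpty()) {
            String uri = frontier.poll();
            if (!loaded.add(uri)) {
                continue; // already resolved, do not load it twice
            }
            for (String next : links.getOrDefault(uri, Collections.<String>emptyList())) {
                if (!loaded.contains(next)) {
                    frontier.add(next);
                }
            }
        }
        return new ArrayList<>(loaded);
    }
}
```

Because resources are visited in the order they are reached, a data structure definition is loaded before its component properties, and those before their concepts.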

Implementation of crawling strategy

- When is data added to the internal triple store?

- getCubes, getDimensions, etc. call checkSufficientInformationGathered(restrictions).

- If a cube is queried for, we check whether we have loaded the data for the cube.

- If YES: Nothing needs to be done, since we can assume that all data for the cube is already loaded.

- If NOT: We load all data for the cube, run the normalisation algorithm, and run the integrity constraint checks.

- There can be technical problems such as a malformed URL. Those should be caught and a summary of problems reported to the user.

- There can be conceptual problems such as a data cube failing integrity constraints. Those should also be caught and a summary of problems reported to the user.

- As with metadata queries (getCubes() etc.) and OLAP queries (executeOlapQuery() etc.), we throw an OlapException when something goes wrong.

- When is data removed from the internal triple store?

- This is done via rollback(), i.e., Olap4ldConnection.rollback().

- Every time getSchemas() is called on an Olap4ldCatalog, we roll back the connection.

- This may be worth looking into to improve performance.

- For now, we have disabled rollback for performance reasons.

- How do we load all data for the cube?

- Collecting data: The EmbeddedSesameEngine accesses the Olap4ld metadata schema of a data cube via the cube's URI: it first resolves the data cube and then, after querying for the DataStructureDefinition, resolves the data cube schema. We assume that there are no slices and that no information is included in other multidimensional elements.
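The load-on-demand behaviour from the list above (load once, then normalise, then check integrity constraints, and report all problems together) could look roughly like the following sketch. All names are hypothetical: `CubeLoader` stands in for the engine's loading, normalisation, and constraint-checking steps, and `LoadException` stands in for the OlapException mentioned above so that the example has no external dependencies.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical sketch of the load-on-demand guard; not the olap4ld code. */
final class LoadOnDemandSketch {

    /** Stand-in for OlapException, to keep the sketch self-contained. */
    static final class LoadException extends Exception {
        LoadException(String message) { super(message); }
    }

    /** Assumed hooks for the three steps run when a cube is not yet loaded. */
    interface CubeLoader {
        void load(String cubeUri) throws Exception;
        void normalise(String cubeUri) throws Exception;
        List<String> violatedConstraints(String cubeUri) throws Exception;
    }

    private final Set<String> loadedCubes = new HashSet<>();
    private final CubeLoader loader;

    LoadOnDemandSketch(CubeLoader loader) { this.loader = loader; }

    void ensureLoaded(String cubeUri) throws LoadException {
        if (loadedCubes.contains(cubeUri)) {
            return; // all data for this cube is assumed to be loaded already
        }
        List<String> problems = new ArrayList<>();
        try {
            loader.load(cubeUri);
            loader.normalise(cubeUri);
            // conceptual problems: failed integrity constraints
            problems.addAll(loader.violatedConstraints(cubeUri));
        } catch (Exception e) {
            // technical problems, e.g. a malformed URL
            problems.add("technical: " + e.getMessage());
        }
        if (!problems.isEmpty()) {
            throw new LoadException("Problems loading " + cubeUri + ": " + problems);
        }
        loadedCubes.add(cubeUri);
    }
}
```

A cube that fails to load is not remembered as loaded, so a later query triggers a fresh attempt.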

Validation of data

ESE ensures that the QB dataset is well-formed according to the QB specification: a QB normalisation algorithm using SPARQL Update queries materialises information only implicitly given in a QB dataset and thus simplifies some metadata queries; QB integrity constraint checks using SPARQL ASK queries ensure that subsequent metadata and OLAP queries on the dataset can be successfully evaluated.

Some integrity constraints require iterating over all observations, e.g., when checking that no two observations in the same qb:DataSet have the same value for all dimensions (IC-12). Therefore, a maximum number of triples can be set up to which those more complex integrity constraints are checked.

Note that we currently reload the entire dataset for every query. This is because we expect that, in theory, data changes frequently in the original source and because we do not want to fill the repository with data that may no longer be needed. Since historic data often does not change and different users possibly issue similar queries, heuristics-based performance optimisations would be possible.
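To illustrate what IC-12 checks and how a triple limit can guard it, here is an in-memory sketch. It is not the SPARQL ASK query that the engine runs; the class `Ic12Sketch`, the representation of observations as maps from dimension to value, and the parameter names are assumptions made for this example.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical in-memory analogue of the IC-12 check with a triple limit. */
final class Ic12Sketch {

    enum Result { SATISFIED, VIOLATED, SKIPPED }

    /**
     * Checks that no two observations of one dataset agree on all dimensions.
     * The check is skipped when the store holds more triples than maxTriples.
     */
    static Result check(List<Map<String, String>> observations,
                        List<String> dimensions,
                        long tripleCount,
                        long maxTriples) {
        if (tripleCount > maxTriples) {
            return Result.SKIPPED; // too expensive for this dataset size
        }
        Set<List<String>> seen = new HashSet<>();
        for (Map<String, String> observation : observations) {
            List<String> key = new ArrayList<>();
            for (String dimension : dimensions) {
                key.add(observation.get(dimension));
            }
            if (!seen.add(key)) {
                return Result.VIOLATED; // same value for every dimension
            }
        }
        return Result.SATISFIED;
    }
}
```

Hashing the dimension values makes this a single pass over the observations, whereas a pairwise comparison grows quadratically, which is why a limit is useful for the query-based check.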

Entity Consolidation

ESE now also performs entity consolidation to allow querying a global cube.

The respective test case is Example_QB_Datasets_QueryTest.testDrillAcrossUnemploymentFearAndEmploymentRateGermanyExampleDatasets() and testDrillAcrossUnemploymentFearAndEmploymentRateGermany().

Example Drill-Across query over GESIS Employment Fear and Estatwrap Employment Rate

For that, we manually added owl:sameAs relationships to ESE.

Problem: Differing hierarchies of gesis:geo and estatwrap:geo

- If I do "reasoning" or entity consolidation,

- Experiment: I want to filter for Germany. The problem is the hierarchies / levels.

- What I want:

- Problem: If I do reasoning, then geo does not have any members anymore.

- Solution: If I replace the unique name by a canonical value, I still need to use all possible original URIs.

- We use the unique names (=canonical values) in the following situations:

- When evaluating analytical operators (Slice,...) => Here, we intend to issue the correct query to the respective dataset.

- When issuing metadata queries (getHierarchies(restrictions)), we create filters => Here, we intend to extend the filter criteria by all possible URIs from the equivalence class.

Execute logical query plan: Drill-across(

Rollup (Slice (Dice (Projection (BaseCube (http://olap4ld.googlecode.com/git/OLAP4LD-trunk/tests/estatwrap/tec00114_ds.rdf#ds), {http://purl.org/linked-data/sdmx/2009/measure#obsValueAGGFUNCAVG, http://purl.org/linked-data/sdmx/2009/measure#obsValuehttp://olap4ld.googlecode.com/git/OLAP4LD-trunk/tests/estatwrap/tec00114_ds.rdf#dsAGGFUNCSUM}), (http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#geo = http://lod.gesis.org/lodpilot/ALLBUS/geo.rdf#00)), {http://ontologycentral.com/2009/01/eurostat/ns#aggreg95, http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#geo, http://ontologycentral.com/2009/01/eurostat/ns#indic_na}), {}),

Rollup (Slice (Dice (Projection (BaseCube (http://lod.gesis.org/lodpilot/ALLBUS/ZA4570v590.rdf#ds), {http://purl.org/linked-data/sdmx/2009/measure#obsValueAGGFUNCAVG, http://purl.org/linked-data/sdmx/2009/measure#obsValuehttp://lod.gesis.org/lodpilot/ALLBUS/ZA4570v590.rdf#dsAGGFUNCSUM}), (http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#geo = http://lod.gesis.org/lodpilot/ALLBUS/geo.rdf#00)), {http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#geo, http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#variable}), {}))

The problem is that for estatwrap, gesis:geo = gesis-geo:00 will not work.

Ok, now we have several possibilities:

Possibility 1) We can change the initial query plan to use the right unique names. In this case, we would not need to change OLAP-to-SPARQL. However, we do not know how to create the right initial query plan: how do we know which dimension of the dataset refers to the canonical value? The canonical values are organised in the Linked Data Engine. There are two different things: 1) the single datasets' metadata as given by the Linked Data Engine and 2) the global dataset as given by the Linked Data Engine. The query to the global dataset is never represented as a logical query plan. Instead, we have an adapted MDX query schema: one or more queried data cubes, lists of member combinations from column, row and filter axes.

- The initial query plan looks like: For every queried data cube, Base-Cube; for the chosen measures of the dataset (direct comparison of unique names) or if no measure is chosen, the first measure of the dataset, Projection; for every possible combination of members of the dataset (direct comparison of unique names, which may be canonical values) on axes, Dice; for every dimension of the dataset (direct comparison of unique names, which may be canonical values) not mentioned in either column or row axis, Slice; and for any higher level of the dataset (again, direct comparison, considering consolidation) selected on columns or rows axes, Roll-Up. Finally, all resulting data cubes are joined via Drill-Across.

Note, we should always create correct initial query plans for separate datasets. If resulting data cubes do not have the same structure, we should throw an error at Drill-Across operator (at execution?).

XXX: Test.

Thus, we work on canonical values for building the initial query plans.

Done: A first step would be to "consolidate" dimensions and members, i.e., to only return canonical identifiers.

Done: A second step would be to only use those canonical identifiers (let's make it the first occurrence) in MDX queries (so that those identifiers can be found). We also replace identifiers from single datasets.

Done: A third step would be to change the generation of OLAP operators to use the right Linked Data identifiers for the respective dataset. However, how to know the correct identifier? No, we let the initial query plan use the canonical identifiers.
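One way to derive canonical identifiers from owl:sameAs statements, with the first occurrence of a URI in an equivalence class acting as the canonical value, is a small union-find structure. This is a sketch under that assumption only; the class `EquivalenceClassesSketch` and its method names are hypothetical and do not describe how ESE stores its equivalence statements.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical union-find over owl:sameAs pairs; canonical = first seen. */
final class EquivalenceClassesSketch {

    private final Map<String, String> parent = new HashMap<>();
    private final Map<String, Integer> firstSeen = new HashMap<>();

    /** Records that a and b are equivalent (e.g. from an owl:sameAs triple). */
    void addSameAs(String a, String b) {
        String rootA = find(a);
        String rootB = find(b);
        if (rootA.equals(rootB)) {
            return;
        }
        // Keep the earlier-seen URI as the root, so it stays canonical.
        if (firstSeen.get(rootA) < firstSeen.get(rootB)) {
            parent.put(rootB, rootA);
        } else {
            parent.put(rootA, rootB);
        }
    }

    /** Returns the canonical URI; an unknown URI is its own canonical value. */
    String canonical(String uri) {
        return find(uri);
    }

    private String find(String uri) {
        if (!parent.containsKey(uri)) {
            parent.put(uri, uri);
            firstSeen.put(uri, firstSeen.size());
        }
        String root = uri;
        while (!parent.get(root).equals(root)) {
            root = parent.get(root);
        }
        parent.put(uri, root); // shorten the path for later lookups
        return root;
    }
}
```

With the statement gesis-geo:00 owl:sameAs estatwrap-geo:DE added in that order, both URIs map to the GESIS URI, which can then be used in the MDX query and in the initial query plan.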

XXX: Evaluate the operators with equivalence classes in mind, i.e., evaluate the consolidation / owl:sameAs with SPARQL queries. The question is how much the OLAP-to-SPARQL query needs to be changed. If I see it correctly, in two situations:

1. For evaluating SlicesRollups, instead of directly using dimension.uri, we set a variable and a Filter with OR of all equivalent URIs of that URI.

2. For evaluating Dices, instead of directly using member.uri, we set a variable and a Filter with OR of all equivalent URIs of that URI.
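The two changes above amount to replacing a fixed URI in a triple pattern by a variable plus a FILTER that ORs over the equivalence class. The following sketch only builds the query fragment as a string; the class `EquivalenceFilterSketch`, the variable names, and the shape of the triple pattern are assumptions and may differ from the queries the OLAP-to-SPARQL algorithm actually generates.

```java
import java.util.List;
import java.util.StringJoiner;

/** Hypothetical builder for SPARQL filters over equivalent URIs. */
final class EquivalenceFilterSketch {

    /** Builds FILTER(?v = <u1> || ?v = <u2> ...) for one equivalence class. */
    static String filter(String variable, List<String> equivalentUris) {
        if (equivalentUris.isEmpty()) {
            throw new IllegalArgumentException("equivalence class must not be empty");
        }
        StringJoiner or = new StringJoiner(" || ", "FILTER(", ")");
        for (String uri : equivalentUris) {
            or.add("?" + variable + " = <" + uri + ">");
        }
        return or.toString();
    }

    /** Dice on one member: dimension and member are both matched by class. */
    static String dicePattern(List<String> dimensionUris, List<String> memberUris) {
        return "?obs ?dim ?member . "
                + filter("dim", dimensionUris) + " "
                + filter("member", memberUris);
    }
}
```

For the Germany example, the member filter would list both gesis-geo:00 and estatwrap-geo:DE, so the same sub-query matches observations in either dataset.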

XXX: A fourth step would be to change the Drill-Across operator to consider equivalences. Maybe not needed since the canonical identifiers are used.

XXX: Test all examples.

Possibility 2) We can change the physical query plan to consider all possible unique names. In this case, we would. In ISEM, we used a similar approach when we queried for the data; we tried all possible dimension URIs and member URIs.

Problem: Result from single datasets uses their own identifiers.

Solution: We need to replace the results with the canonical values. Then, Drill-Across does not need to do anything, anymore.

Next steps: Evaluate Dices, properly. Would be close query to ISEM paper query. Then, experiment to evaluate relative query processing times. Unfortunately, no larger scale experiments, probably.

Next steps: Drill-Across queries over 1,2,4,8,16,32 Eurostat datasets? Make visible, how the relative time behaves?

Problem: For Convert-Cube, I do not want Entity Consolidation since this would make the evaluation more complex. For Drill-Across, I want Entity Consolidation. How to distinguish?

Solution: For now, I simply have a static MAGIC variable of EmbeddedSesameEngine that contains the Equivalence Statements and can be filled by any user.

Problem: What about diced dimensions that are not contained in one cube?

Problems:

For {[httpXXX3AXXX2FXXX2FlodYYYgesisYYYorgXXX2FlodpilotXXX2FALLBUSXXX2FgeoYYYrdfXXX2300]} there is no output. Let's see why no results. The solution was a correct equivalence mapping: http://estatwrap.ontologycentral.com/dic/geo#DE

For {[httpXXX3AXXX2FXXX2FlodYYYgesisYYYorgXXX2FlodpilotXXX2FALLBUSXXX2FvariableYYYrdfXXX23v590_1]} there is an error. Probably, since for estatwrap, there is no hierarchy for variable members. Solution: 1) Do not allow, since there is no counterpart for estatwrap. 2) Allow, since we dice that dimension, only. The semantics are not correct, though. However, in this case, the results do not make sense. We could compute the employment fear metric first, and then do the Drill-Across....?

For {[httpXXX3AXXX2FXXX2FestatwrapYYYontologycentralYYYcomXXX2FdicXXX2FgeoXXX23DE]} there is no output. Same as above. But gesis is now right member.

New Experiment as in ISEM paper

- Done for ISEM paper

- # Datasets

- Triples

- # Lookups?

- Observation?

- Time for SPARQL over Qcrumb

- Building of MDM

- Serialising MDM with XML/SQL

- MDX query processing

For LDCX we distinguished

- Loading and validating a dataset (load URIs, run normalisation, run integrity constraint checks)

- Generating logical query plan (parse MDX, transform MDX parse tree, run metadata queries after loading dataset)

- Executing the logical query plan and returning the results (generating and executing physical query plan, caching results)

ISEM More time:

- Querying metadata and data

- Building MDM, serialising MDM

Less time:

- Query processing using OLAP engine

LDCX Less time:

- Loading and validating a dataset

More time:

- Generating logical query plan

- Generating physical query plan

- Executing physical query plan

Plan to monitor for each experiment

- Datasets (from "")

- Observations? (from "")

- Triples (from "")

- Lookups? (from "")

- Loading and validating datasets (load URIs, run normalisation, run integrity constraint checks)

- Generating logical query plan (parse MDX, transform MDX parse tree, run metadata queries after loading dataset, entity consolidation for metadata queries)

- Generating physical query plan (consider equivalence classes, create SPARQL query)

- Executing physical query (execute SPARQL query, caching results)

- Evaluate Drill-Across of olap4ld with equivalence relationships in Linked Data:

- Simply repeat experiment from ISEM, and explain difficulties.

- Correctly computed comparison of Employment Fear vs. Real GDP Growth Rate.

- Growth rate:

- http://epp.eurostat.ec.europa.eu/tgm/table.do?tab=table&init=1&plugin=1&language=en&pcode=tec00115 (not tsieb020, anymore, renamed? See number of triples)

- http://estatwrap.ontologycentral.com/data/tec00115

- Integration/Entity consolidation of gesis:geo and estatwrap:geo as well as gesis-geo:00 and estatwrap-geo:DE included.

- No complex measure included.

Logical query plan: Drill-across(

Rollup (Slice (Dice (Projection (BaseCube (http://estatwrap.ontologycentral.com/id/tec00115#ds), {http://purl.org/linked-data/sdmx/2009/measure#obsValuehttp://estatwrap.ontologycentral.com/id/tec00115#dsAGGFUNCAVG}), (http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#geo = http://lod.gesis.org/lodpilot/ALLBUS/geo.rdf#00)), {http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#geo, http://ontologycentral.com/2009/01/eurostat/ns#unit}), {}),

Rollup (Slice (Dice (Projection (BaseCube (http://lod.gesis.org/lodpilot/ALLBUS/ZA4570v590.rdf#ds), {http://purl.org/linked-data/sdmx/2009/measure#obsValuehttp://lod.gesis.org/lodpilot/ALLBUS/ZA4570v590.rdf#dsAGGFUNCAVG}), (http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#geo = http://lod.gesis.org/lodpilot/ALLBUS/geo.rdf#00)), {http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#geo, http://lod.gesis.org/lodpilot/ALLBUS/vocab.rdf#variable}), {}))

New experiments: DrillAcross GdpEmployment

What we track from Log File

LDCX_Query_Attributes.QUERYTIME, LDCX_Query_Attributes.QUERYNAME, LDCX_Query_Attributes.DATASETSCOUNT, LDCX_Query_Attributes.TRIPLESCOUNT, LDCX_Query_Attributes.OBSERVATIONSCOUNT, LDCX_Query_Attributes.LOOKUPSCOUNT, LDCX_Query_Attributes.LOADVALIDATEDATASETSTIME, LDCX_Query_Attributes.EXECUTEMETADATAQUERIESTIME, LDCX_Query_Attributes.EXECUTEMETADATAQUERIESCOUNT, LDCX_Query_Attributes.GENERATELOGICALQUERYPLANTIME, LDCX_Query_Attributes.GENERATEPHYSICALQUERYPLANTIME, LDCX_Query_Attributes.EXECUTEPHYSICALQUERYPLAN

Sqlite query for average over relevant metrics:

sqlite3 /home/benedikt/Workspaces/Git-Repositories/olap4ld/OLAP4LD-trunk/testresources/bottleneck.db 'select querytime,queryname,triplescount,avg(loadvalidatedatasetstime),avg(executemetadataqueriestime),avg(generatelogicalqueryplantime),avg(generatephysicalqueryplantime),avg(executephysicalqueryplantime) from bottleneck where queryname like "%test%" group by queryname,triplescount order by triplescount, queryname desc'

Experiment:

system|runid|nr|system_name|querytime|queryname|datasetscount|triplescount|observationscount|lookupscount|loadvalidatedatasetstime|executemetadataqueriestime|executemetadataqueriescount|generatelogicalqueryplantime|generatephysicalqueryplantime|executephysicalqueryplantime

|2014-05-05/16:38:47|0|bottleneck|May 5, 2014 4:36:12 PM | testGdpEmployment |2|3103|360|10|220|4619|28|1579|30|1

|2014-05-05/16:38:47|0|bottleneck|May 5, 2014 4:37:59 PM | testGdpEmployment |2|3103|360|10|234|4923|28|1860|35|0

|2014-05-05/16:38:47|0|bottleneck|May 5, 2014 4:38:13 PM | testGdpEmployment |2|3103|360|10|208|4725|28|1739|32|1

|2014-05-05/16:38:47|0|bottleneck|May 5, 2014 4:38:06 PM | testGdpEmployment |2|3103|360|10|219|4835|28|1616|35|1

|2014-05-05/16:38:47|0|bottleneck|May 5, 2014 4:37:44 PM | testGdpEmployment |2|3103|360|10|629|9201|28|2416|87|2

|2014-05-05/16:38:47|0|bottleneck|May 5, 2014 4:37:52 PM | testGdpEmployment |2|3103|360|10|295|5576|28|1802|37|1

Average:

querytime|queryname|triplescount|avg(loadvalidatedatasetstime)|avg(executemetadataqueriestime)|avg(generatelogicalqueryplantime)|avg(generatephysicalqueryplantime)|avg(executephysicalqueryplantime)

May 5, 2014 4:37:52 PM | testGdpEmployment |3103|300.833333333333|5646.5|1835.33333333333|42.6666666666667|1.0

XXX: Experiments

- New version of LDCX that allows Drill-Across with equivalence relationships in Linked Data:

- Robust, no funky error in case a Dimension is chosen that is not contained in both. Maybe have outer-join?

- Drill-Across: GESIS - Eurostat

- Drill-Across: Eurostat

- Drill-Across: Edgar - Yahoo! Finance

LogicalToPhysical Query Plan Strategy

- Short: First used the Visitor pattern, now use a depth-first strategy

==2014-07-07==

* Filtering for duplicates at getDimensions(), getHierarchies()... on all "unique" attributes, now that we have duplication strategy.

* Problem: I have never combined OLAP-to-SPARQL with Convert-Cube/Merge-Cubes.

* Solution: Why can I not implement Convert-Cube/Merge-Cubes using SPARQL in an extended OLAP-to-SPARQL algorithm? Because 1) Convert-Cube/Merge-Cubes represent "implicit" datasets that I may want to materialise for faster query processing, and 2) whereas Projection, Dice, Slice are issued by the user explicitly, Convert-Cube / Merge-Cubes are run automatically by the system to increase the number of answers to an OLAP query (an OLAP-to-SPARQL query is fixed on one dataset, whereas an OLAP query issues a Drill-Across over all possible datasets, returning several answers to a query).

* To do OLAP-to-SPARQL over Convert-Cube/Merge-Cubes, I need to execute Convert-Cube/Merge-Cubes separately and only feed the data cube URI.

Problem:

What operators do we have:

BaseCube

Projection

Dice

Slice

Roll-Up

Drill-Across

What iterators do we have:

GetCubesSparqlIterator <= Not implemented - at all.

OlapDrillAcross2JoinSesameVisitor

DrillAcrossNestedLoopJoinSesameIterator <= works over every iterator that returns tuples: Olap2SparqlAlgorithmSesameIterator (OLAP-to-SPARQL), Convert-Cube (ConvertSparqlDerivedDatasetIterator), Base-Cube (BaseCubeSparqlDerivedDatasetIterator). Requires 2 input iterators (that return tuples).

Iterator x Iterator -> Tuples
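The "Iterator x Iterator -> Tuples" contract of a nested-loop Drill-Across can be illustrated as follows. This is a simplified sketch, not the code of DrillAcrossNestedLoopJoinSesameIterator: the class `NestedLoopJoinSketch` is hypothetical, tuples are modelled as maps from column name to value, and the list of shared dimensions is passed in explicitly.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

/** Hypothetical nested-loop join of two tuple iterators on shared dimensions. */
final class NestedLoopJoinSketch {

    static List<Map<String, String>> join(Iterator<Map<String, String>> left,
                                          Iterator<Map<String, String>> right,
                                          List<String> sharedDimensions) {
        // The inner input is scanned once per outer tuple, so buffer it first.
        List<Map<String, String>> inner = new ArrayList<>();
        while (right.hasNext()) {
            inner.add(right.next());
        }
        List<Map<String, String>> result = new ArrayList<>();
        while (left.hasNext()) {
            Map<String, String> l = left.next();
            for (Map<String, String> r : inner) {
                if (agree(l, r, sharedDimensions)) {
                    // Measures are assumed to have distinct column names;
                    // otherwise the right-hand value overwrites the left one.
                    Map<String, String> joined = new LinkedHashMap<>(l);
                    joined.putAll(r);
                    result.add(joined);
                }
            }
        }
        return result;
    }

    private static boolean agree(Map<String, String> l, Map<String, String> r,
                                 List<String> dimensions) {
        for (String dimension : dimensions) {
            if (!Objects.equals(l.get(dimension), r.get(dimension))) {
                return false;
            }
        }
        return true;
    }
}
```

Since the join only consumes iterators of tuples, it is indifferent to whether an input comes from OLAP-to-SPARQL, Convert-Cube, or Base-Cube.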

For query trees without Drill-Across and Convert-Cube

* Olap2SparqlSesameVisitor

Olap2SparqlAlgorithmSesameIterator <= OLAP-to-SPARQL algorithm. Works only over engine that provides SPARQL functionality + No input iterators.

Engine -> Tuples

For query trees with Convert-Cube / Base-Cube

* Olap2SparqlSesameDerivedDatasetVisitor

BaseCubeSparqlDerivedDatasetIterator <= Works, but simply returns tuple representation (since data is already loaded by then from: calling getCubes on EmbeddedSesameEngine). Requires repository + No input iterators.

(Repo) -> Tuples (Repo)

ConvertSparqlDerivedDatasetIterator <= Convert-Cube implementation. Requires repository + 1-2 input iterators.

Iterator x Iterator (Repo) -> Tuples (Repo)

SliceSparqlDerivedDatasetIterator <= Not implemented, yet.

DrillAcrossSparqlDerivedDatasetIterator <= Not implemented, yet: Two datasets are joined. For now, just return the first

Solution:

* Make BaseCube take only the QB dataset URI *todo still, errors*

* Make BaseCubeSparqlDerivedDatasetIterator take only the QB dataset URI

(Engine) -> Tuple (Engine)

* Make Olap2SparqlAlgorithmSesameIterator take in the engine and an iterator for the cube name.

Iterator (Engine) -> Tuple (Engine)

* Leave ConvertSparqlDerivedDatasetIterator

Iterator x Iterator (Engine) -> Tuples (Engine)

* Reduce number of visitors?

** Only works with LQPs that have 1) any number of Drill-Across at the beginning (for joining, comparison, merging with DrillAcrossNestedLoopJoinSesameIterator), 2) one set of Projection, Dice, Slice, Roll-Up (for OLAP operations with Olap2SparqlAlgorithmSesameIterator), 3) any number of Convert-Cube / Merge-Cubes (for ConvertSparqlDerivedDatasetIterator), and 4) any number of Base-Cube (for BaseCubeSparqlDerivedDatasetIterator).

** Convert-Cube / Merge-Cubes / Base-Cube may be added automatically.

Then, we can have DrillAcrossNestedLoopJoinSesameIterator -> Olap2SparqlAlgorithmSesameIterator -> ConvertSparqlDerivedDatasetIterator -> BaseCubeSparqlDerivedDatasetIterator.

Before, only ConvertSparqlDerivedDatasetIterator -> BaseCubeSparqlDerivedDatasetIterator OR ConvertSparqlDerivedDatasetIterator -> ConvertSparqlDerivedDatasetIterator OR DrillAcrossNestedLoopJoinSesameIterator on top of everything (yes, Drill-Across worked for both kinds of trees) was possible.

==Problem: How to build the Visitor? How to create Physical Iterator Plan?==

* What will the Logical Query Plan look like? 1) any number of Drill-Across at the beginning (for joining, comparison, merging with DrillAcrossNestedLoopJoinSesameIterator), 2) one set of Projection, Dice, Slice, Roll-Up (for OLAP operations with Olap2SparqlAlgorithmSesameIterator), 3) any number of Convert-Cube / Merge-Cubes (for ConvertSparqlDerivedDatasetIterator), and 4) any number of Base-Cube (for BaseCubeSparqlDerivedDatasetIterator).

** Convert-Cube / Merge-Cubes / Base-Cube may be added automatically.

* How about:

** Visitor Pattern

** If Drill-Across: Recursive Application of new Visitor Pattern, taking in two Iterators that are connected in a new Iterator (DrillAcrossNestedLoopJoinSesameIterator)

** If Projection, Dice, Slice, Roll-Up: Collection of information from these operators.

** If Base-Cube: Using dataseturi and connecting a new BaseCubeSparqlDerivedDatasetIterator to one or more ConvertSparqlDerivedDatasetIterator or Olap2SparqlAlgorithmSesameIterator

** If Convert-Cube: Collection of information from these operators.

One possibility: Go through the tree. For Convert-Cube / Base-Cube, use Olap2SparqlSesameDerivedDatasetVisitor, and for everything else use Olap2SparqlSesameVisitor; add each result to an iterator list that is then drilled across with its own iterator.

One could keep that assuming that after Convert-Cube and Base-Cube only Convert-Cube or Base-Cube can show up.

However, what about Olap2SparqlSesameVisitor? For Convert-Cube / Base-Cube, one could use Olap2SparqlSesameDerivedDatasetVisitor and the result will then be used as input to the OLAP-to-SPARQL iterator.

Try it!

Problem: Iterators need SPARQL functionality from the LDE. *implemented, refactored*

Can I go depth-first through the logical query plan to "build" the physical query plan?

Pseudo-code:

compile(Node node) {

Iterator theIterator;

if (node instanceof Drill-Across) {

//recursively go through children

Iterator iterator1 = compile(node.getInput1());

Iterator iterator2 = compile(node.getInput2());

theIterator = new Drill-Across-Iterator(iterator1, iterator2);

}

if (node instanceof Roll-Up, Slice, Dice, Projection) {

//recursively go through children

store data about Roll-Up, Slice, Dice, Projection;

if (node.getInput() instanceof Base-Cube/Convert-Cube) {

Iterator iterator1 = compile(node.getInput());

theIterator = new OLAP-to-SPARQL(iterator1);

} else {

theIterator = compile(node.getInput());

}

}

if (node instanceof Convert-Cube) {

//recursively

Iterator iterator1 = compile(node.getInput1());

Iterator iterator2 = compile(node.getInput2());

theIterator = new Convert-Cube-Iterator(iterator1, iterator2);

}

if (node instanceof Base-Cube) {

theIterator = new Base-Cube-Iterator(dataseturi);

}

return theIterator;

}
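The pseudo-code can be turned into a small runnable sketch. To stay self-contained, it does not build real iterators; it returns a textual description of the physical plan, and all class, enum, and method names (`PlanCompilerSketch`, `Op`, `Node`, `base`, `op`) are hypothetical simplifications rather than olap4ld types. As in the pseudo-code, Roll-Up, Slice, Dice, and Projection are collected until a Base-Cube or Convert-Cube is reached and are then handed to a single OLAP-to-SPARQL step.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

/** Hypothetical depth-first compiler from logical plan to plan description. */
final class PlanCompilerSketch {

    enum Op { DRILL_ACROSS, ROLL_UP, SLICE, DICE, PROJECTION, CONVERT_CUBE, BASE_CUBE }

    static final class Node {
        final Op op;
        final String datasetUri; // only used by BASE_CUBE
        final List<Node> inputs;
        Node(Op op, String datasetUri, Node... inputs) {
            this.op = op;
            this.datasetUri = datasetUri;
            this.inputs = Arrays.asList(inputs);
        }
    }

    static Node base(String datasetUri) { return new Node(Op.BASE_CUBE, datasetUri); }

    static Node op(Op op, Node... inputs) { return new Node(op, null, inputs); }

    static String compile(Node node) {
        return compile(node, new ArrayList<Op>());
    }

    private static String compile(Node node, List<Op> pending) {
        switch (node.op) {
            case DRILL_ACROSS:
                // each branch starts with its own, empty operator collection
                return "DrillAcross(" + compile(node.inputs.get(0)) + ", "
                        + compile(node.inputs.get(1)) + ")";
            case ROLL_UP:
            case SLICE:
            case DICE:
            case PROJECTION: {
                pending.add(node.op); // store data about the OLAP operator
                Node input = node.inputs.get(0);
                if (input.op == Op.BASE_CUBE || input.op == Op.CONVERT_CUBE) {
                    return "OlapToSparql" + pending + "(" + compile(input) + ")";
                }
                return compile(input, pending);
            }
            case CONVERT_CUBE: {
                StringJoiner args = new StringJoiner(", ", "ConvertCube(", ")");
                for (Node input : node.inputs) {
                    args.add(compile(input));
                }
                return args.toString();
            }
            case BASE_CUBE:
                return "BaseCube(" + node.datasetUri + ")";
            default:
                throw new IllegalArgumentException("Unknown operator: " + node.op);
        }
    }
}
```

The sketch relies on the plan shape described earlier: Drill-Across operators at the top, one set of OLAP operators per branch, and only Convert-Cube or Base-Cube below them.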

Default Measure Strategies

- Per default for a dataset, we define the following measures:

- In case no aggregation function is defined:

- The measure itself with the GROUP_CONCAT aggregation function: "sdmx-measure:obsValue"

- Dataset-independent measures for AVG, SUM, COUNT with "sdmx-measure:obsValueAVG"

- Dataset-dependent measures for AVG, SUM, COUNT with "sdmx-measure:obsValuehttps://.../AVG"

- In case an aggregation function is defined: "sdmx-measure:obsValue"

- In case no aggregation function is defined: